Databricks case studies

Role: Copywriter

Project Type: Marketing success stories

Databricks is a data intelligence program that maximizes an organization’s potential for data and AI. I was hired by Seattle-based agency Red Door Collaborative to write three customer stories that highlight Databricks’ capabilities as they pertain to two of its clients, Volvo and AnyClip. Both are reprinted below.

Enabling real-time distribution of 700K+ spare parts worldwide

www.databricks.com/customers/volvo/lakeflow-declarative-pipelines

“Using Spark Declarative Pipelines, now with just a simple click, we can effortlessly transition our metadata scheduling from triggered to continuous and back again, all without the need to dive into complex code, which saves us a considerable amount of time.” - Bruno Magri, Senior Data Engineer, Volvo Group Service Market Logistics

The Volvo Group Service Market Logistics (SML) team manages and distributes a massive spare parts inventory for Volvo Group worldwide across the entire chain, from supplier to truck dealer. With roughly 200,000 new Volvo trucks sold yearly (and millions more on the road) and hundreds of thousands of spare parts spread across warehouses globally, keeping track of every spare part — and ensuring each one arrives on time at the dealership that needs it — is daunting. Following the implementation of the Databricks Data Intelligence Platform and Spark Declarative Pipelines, the team has visibility into how and where to stock their inventory for immediate needs and predictive scenarios.

Siloed data systems put the entire supply chain at risk

When trucks break down, deliveries get delayed, supply chains slip and consumers and manufacturers feel the pinch. “Our main target is to have spare parts available close to our customers,” said Ingmar Rogiers, Digital Product Manager at Volvo SML. “That’s what SML is doing to secure good service for our customers.”

Knowing what spare parts are where and even predicting what should be where is critical to the flow of the entire Volvo SML system. But hitting that target wasn’t always easy. Before their Databricks implementation, Volvo’s data stack was usable but had room for improvement. Years of mergers and acquisitions created a scattered application landscape containing several legacy systems. Instead of being managed from a centralized hub, inventory planning occurred individually, warehouse to warehouse, with limited visibility into what resources could be shared.

“We had a great opportunity to improve the integrated planning and coordination,” explained Rogiers.

The Volvo SML team needed a central platform to gather and integrate all their data sources. They knew that if they could steer their operations from that platform, monumental gains were just around the corner.

Improving systems to near real-time data ingestion and automation

During the discovery phase of Volvo SML’s cloud journey, they experimented with other solutions, including Azure Data Factory (ADF). However, they quickly realized that the Databricks solution was the only way forward for their unique needs.

The first step in the transformation was to adopt the Databricks Data Intelligence Platform. This critical first move brought Volvo’s previously separate spare parts data sources together under a single, unified platform.

From there, the team deployed Databricks Spark Declarative Pipelines and Databricks Lakeflow Jobs to ingest and process Volvo’s huge amounts of data in near real-time, a new capability for the organization. It was a game changer and the primary reason for adopting Spark Declarative Pipelines and Lakeflow Jobs.

“That’s where it all started, because a planning and supply-chain business is very fast moving,” said Rogiers. “Your data is quickly outdated. So the expectation is that every minute counts.”

What once was triggered in minutes could now be triggered in mere seconds. “With just a simple click, we can effortlessly transition our metadata scheduling from triggered to continuous and back again, all without the need to dive into complex code, which saves us a considerable amount of time,” recounted Bruno Magri, Senior Data Engineer at Volvo SML.

Armed with these new capabilities, the team then rolled out the Spark Declarative Pipelines automated operation features to bolster processes and improve efficiency around routine tasks, including automatic checkpointing, background maintenance, table optimizations, infrastructure autoscaling and more.

“Now we don’t need to worry because of the optimizations and maintenance jobs that run in the background,” offered Bruno. “If you need to refresh the table you can do it with a few clicks. Whether it’s data type changes or schema changes, Databricks Spark Declarative Pipelines can handle those things almost automatically. It’s a nice feature.”

For data orchestration, the team switched from ADF to Databricks Lakeflow Jobs. “Lakeflow Jobs has been a great orchestrator for us,” continued Bruno. “We can query all the data using database APIs and build a monitoring report to see if a job is failing, how much time it’s taking on average and if it’s taking more than the average for that job.”
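To make the kind of monitoring report Bruno describes more concrete, here is a minimal sketch in plain Python. It is an illustration only, not Volvo's implementation and not a Databricks API: the run records, job names and the `build_report` helper are all hypothetical, and in practice the run history would come from whatever API or table exposes it.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical run history; the field names and job names are assumptions.
runs = [
    {"job": "ingest_parts", "status": "SUCCESS", "seconds": 120},
    {"job": "ingest_parts", "status": "SUCCESS", "seconds": 130},
    {"job": "ingest_parts", "status": "FAILED", "seconds": 410},
    {"job": "refresh_inventory", "status": "SUCCESS", "seconds": 60},
    {"job": "refresh_inventory", "status": "SUCCESS", "seconds": 62},
]


def build_report(run_history):
    """Summarize failures, average duration and slower-than-average runs per job."""
    by_job = defaultdict(list)
    for run in run_history:
        by_job[run["job"]].append(run)

    report = {}
    for job, job_runs in by_job.items():
        average = mean(r["seconds"] for r in job_runs)
        report[job] = {
            "average_seconds": average,
            "failed_runs": sum(1 for r in job_runs if r["status"] == "FAILED"),
            # Durations of runs that took longer than this job's own average.
            "slower_than_average": [
                r["seconds"] for r in job_runs if r["seconds"] > average
            ],
        }
    return report


report = build_report(runs)
for job, summary in report.items():
    print(job, summary)
```

The three questions in the quote map onto the three fields in each summary: whether a job is failing, how long it takes on average, and which runs exceeded that average.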

Benefits beyond inventory visibility

Since the Spark Declarative Pipelines deployment, Volvo has realized new capabilities and efficiencies, from global reporting and end-to-end order tracking to real-time inventory processing. The Volvo SML team now has unprecedented access to automated operations, autoscaling and the unifying benefits of the Databricks Data Intelligence Platform built on lakehouse architecture.

“Today, we get an integrated view of where spare parts are, the value of spare parts across warehouses and the potential costs involved in shipping parts from warehouse to warehouse or dealers,” touted Rogiers.

Real-time data ingestion and processing also helps Volvo SML plan for expansion. “We have a task in our organization that we call ‘footprint design,’ where we identify where our next warehouse should be located or which warehouse should be responsible for which particular markets and brands,” added Rogiers.

With up to 40% efficiency gains across many routine database tasks, the Volvo SML team can now look to the future with confidence, knowing Databricks Spark Declarative Pipelines and Lakeflow Jobs are helping make a major difference across the organization.

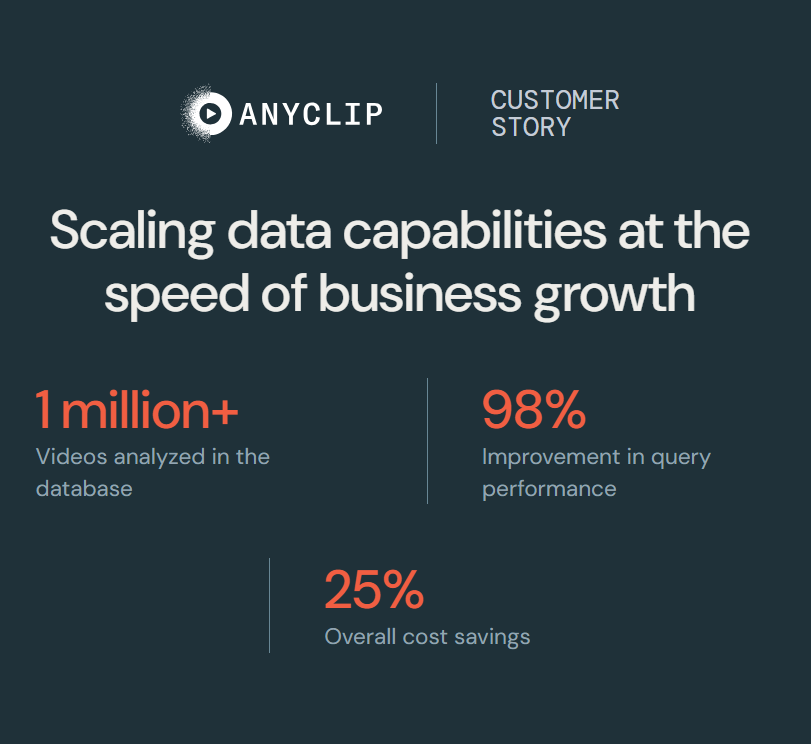

Scaling data capabilities at the speed of business growth

www.databricks.com/customers/anyclip

“Overall the cost of Databricks SQL compared to Amazon Redshift is close to 25% cheaper and the performance is better. And now with Databricks SQL, our system runs 50% faster.” - Gal Doron, Head of Data, AnyClip

AnyClip is a visual intelligence company whose AI-powered platform turns traditional video into interactive content that is fully searchable, measurable, personalized and monetized for consumer and corporate audiences. AnyClip’s client roster includes Fortune 500 companies with huge video libraries, which AnyClip tools analyze frame by frame, recognizing people, brands and topics among other data points — all of which are stored and processed for reporting and querying. As the company experienced rapid growth, the amount of data, and its history, proved too voluminous for their initial Amazon Redshift data stack to handle, leading to painfully slow data query times and an unstable environment. After evaluating Snowflake, BigQuery and Databricks SQL, AnyClip migrated from Redshift to Databricks SQL to solve these and other scaling issues. Today the system is stable and highly responsive, leading to easy and unlimited report gathering, hyperefficient workflows and faster and better performance insights.

Time-consuming data queries lead to frustration

AnyClip’s innovative AI platform transforms how video is produced and consumed around the globe. Every video AnyClip receives is analyzed to the millisecond. The data is then presented to internal and external stakeholders via customized dashboards that reveal overall performance, audience engagement, revenue tracking and a plethora of other viewership metrics. That data can then be analyzed or further queried to drive future content strategies and video investment decisions.

Accomplishing this wasn’t always easy. With the previous data stack using Redshift, queries yielded widespread frustration rather than insights. “People would write to me and say things like, ‘What’s going on?’” explained Gal Doron, Head of Data at AnyClip. “‘Why am I not getting any results for my query? It’s just running endlessly.’”

Doron understood the frustration firsthand. He and his team were mired in their own manual tasks — like rewriting tables due to rogue data arriving late or from disparate sources — which sometimes brought the system to a halt. All while trying to sustain a toolset that worked adequately when the company was smaller but proved insufficient as AnyClip experienced rapid growth.

With more videos arriving daily — total video inventory is now in the millions — costs and inefficiency were growing. “We very quickly got to a point where we had two options: Either scale enormously in terms of machines in Redshift, which would double and triple our costs, or find a different solution,” said Doron. “That’s when I started looking, and that’s where Databricks hugely solved that issue.”

Making data work for end users

In their hunt for a new solution, the AnyClip team quickly realized that leveraging materialized views in Databricks SQL could address their most pressing issues and transform data querying from a tedious task into a simple and empowering activity.

A materialized view is a data warehousing construct that stores the results of a precomputed query. By precomputing results, materialized views accelerate SQL analytics and BI reports while also reducing costs. Databricks SQL materialized views are especially powerful because they’re built on Spark Declarative Pipelines, bringing query incrementalization and data engineering best practices into the world of data warehousing.
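The idea of precomputing and then refreshing incrementally can be sketched with a toy example in plain Python. This is a conceptual illustration under stated assumptions, not how Databricks SQL implements materialized views: the `ViewsPerVideo` class, its methods and the sample rows are hypothetical.

```python
from collections import defaultdict


class ViewsPerVideo:
    """Toy stand-in for a materialized view: a stored, precomputed aggregate."""

    def __init__(self):
        self.totals = defaultdict(int)  # video_id -> total views so far
        self.rows_processed = 0         # counts how much work refreshes did

    def refresh(self, new_rows):
        """Incremental refresh: fold in only the rows that arrived since last time."""
        for video_id, views in new_rows:
            self.totals[video_id] += views
            self.rows_processed += 1

    def query(self, video_id):
        """Answer from the stored result without rescanning the raw rows."""
        return self.totals.get(video_id, 0)


view = ViewsPerVideo()
view.refresh([("a", 10), ("b", 5), ("a", 7)])  # initial load
view.refresh([("b", 3)])                        # later batch: one new row only

total_a = view.query("a")
total_b = view.query("b")
print(total_a, total_b, view.rows_processed)
```

Queries read the stored totals instead of re-aggregating the raw rows, and the second refresh touches one row rather than all four, which is the intuition behind faster reports at lower cost.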

Using materialized views in Databricks SQL had immediate and profound impacts for AnyClip. “We’ve seen query performances improve by 98% with some of our tables that have several terabytes of data,” affirmed Doron. “Previously when users tried to run queries, it could take hours. With Databricks, it takes something between half a minute to three minutes.”

Another example is how the rush for reporting on Monday mornings, when everyone comes back from the weekend and wants the latest data, has changed. “All the reports were scheduled for the exact same time, and previously, everything got stuck,” Doron related. “Now it’s one hundred percent more stable in terms of performance.”

Perhaps most notable, Doron no longer worries about the data stack keeping pace with business growth. “Databricks hugely solved that issue,” he proclaimed. “Sometimes I have very small data models and I don’t need to scale there. But when I have a huge data model, I can scale and it’ll be much cheaper — overall about 25% cheaper and close to 50% faster — and very accurate. I don’t need to scale the whole warehouse. I can just scale in one place.”

Delta Lake Liquid Clustering, a data layout technique that replaces table partitioning and ZORDER, also helped AnyClip with query performance. According to Doron, the team saw “orders of magnitude improvement in performance with Liquid Clustering” versus the traditional approach of partitioning plus ZORDER as they migrated data warehouse and analytics workloads from Redshift to Databricks SQL.

Putting power in the hands of stakeholders

Doron runs a lean engineering team. Therefore, his business users, both internal and external, are the de facto data analysts. With Databricks, Doron can let curiosity guide his team. “If they need a report, they just create it,” he stated. “They’re doing it on their own and we’re seeing some amazing stuff.”

For example, a vice president of marketing recently decided to analyze election videos to determine if audience engagement changed when female candidates were on screen versus male candidates. “She did it alone — a whole dashboard with comparison charts and graphs and more,” Doron noted. “That’s how easy it is for our business users who are using my system.”

Ultimately, Doron knows the new system is doing its job because he’s not receiving any of the complaints he used to. “It’s much quieter now,” he shared.